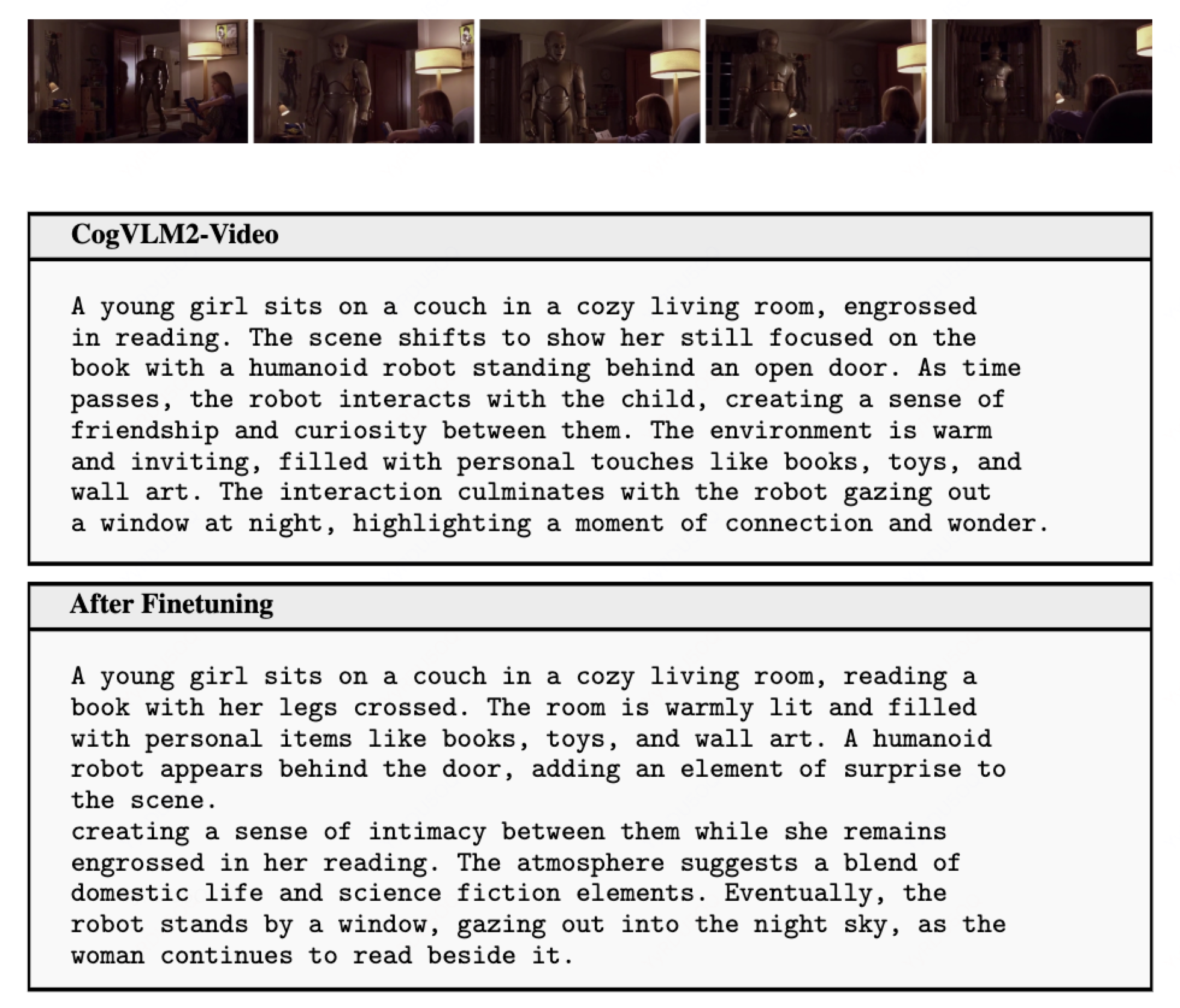

As shown in figure, the comparison reveals that the fine-tuned model is capable of generating more detailed and comprehensive descriptions of object movements and interactions.

@misc{wang2024lvbench,

title={Capturing Motion: Fine-Tuning Video Captioning Models with Dynamic Action Data},

author={Zhiqin Fang and Lefan Wang and Zhuoyi Yang and Jiayan Teng and Yean Cheng and Shiyu Huang and Yuxiao Dong and Zhaofeng He and Jie Tang},

year={2024},

eprint={2406.08035},

archivePrefix={arXiv},

primaryClass={cs.CV}

}